BIG-O Notation in Programming

Hey there, Arin here.

Happy coding!

In this blog we'll discuss Big-O notation in programming. Don't be intimidated: it's really easy to understand and apply.

What is Big-O Notation?

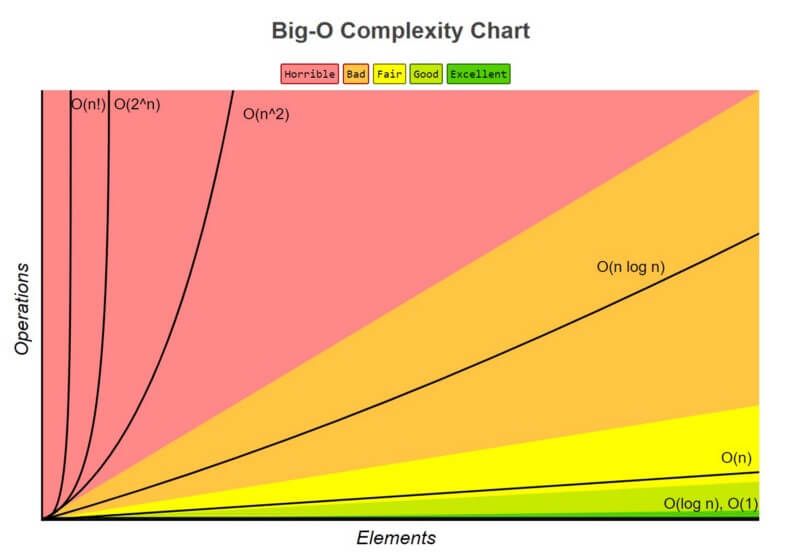

Big O notation expresses an upper bound on how an algorithm's runtime grows with the size of its input, which is why it is most often used to describe worst-case time complexity.

That is the standard definition. Put briefly, it describes how the time and memory an algorithm needs grow as the input grows.

Basically, it's a yardstick for the performance of any piece of code or program.
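To make "performance" a bit more concrete, here is a small sketch that counts loop iterations instead of measuring seconds. The function names `count_linear_steps` and `count_quadratic_steps` are made up for this illustration, and counting iterations is only a rough stand-in for real runtime.

```python
def count_linear_steps(items):
    """Count iterations of a single pass over the input: grows like n."""
    steps = 0
    for _ in items:
        steps += 1
    return steps


def count_quadratic_steps(items):
    """Count iterations of a nested pass over the input: grows like n * n."""
    steps = 0
    for _ in items:
        for _ in items:
            steps += 1
    return steps


# Compare how the two counts grow as the input gets bigger.
for n in (10, 100, 1000):
    data = list(range(n))
    print(n, count_linear_steps(data), count_quadratic_steps(data))
```

When the input gets 10 times bigger, the single loop does 10 times more work and the nested loop does 100 times more. That growth pattern is what Big O captures: O(n) for the first function and O(n²) for the second.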

Big O is one of a family of related asymptotic notations:

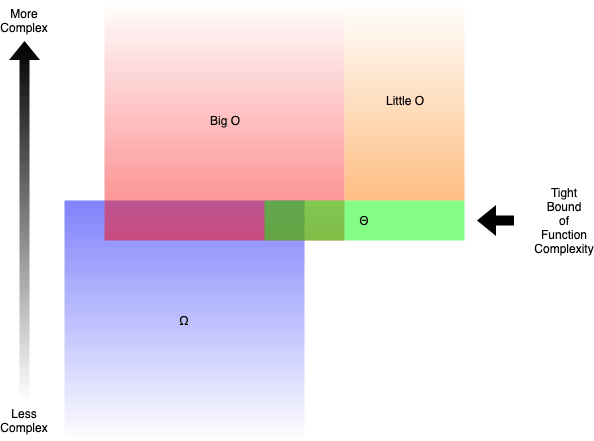

- Big O: “f(n) is O(g(n))” if for some constants c > 0 and N₀, f(n) ≤ c·g(n) for all n > N₀

- Omega: “f(n) is Ω(g(n))” if for some constants c > 0 and N₀, f(n) ≥ c·g(n) for all n > N₀

- Theta: “f(n) is Θ(g(n))” if f(n) is O(g(n)) and f(n) is Ω(g(n))

- Little O: “f(n) is o(g(n))” if for every constant c > 0 there is an N₀ such that f(n) < c·g(n) for all n > N₀
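The constants c and N₀ are easier to trust once you try them on a real function. The sketch below takes f(n) = 3n² + 5n and g(n) = n² as an example chosen for this post. The helper names `holds_upper` and `holds_lower` are hypothetical. A finite check like this is not a proof, but here the algebra backs it up: 3n² + 5n ≤ 4n² exactly when n ≥ 5, and 3n² + 5n ≥ 3n² for every n ≥ 0.

```python
def f(n):
    """Example cost function: 3n^2 + 5n."""
    return 3 * n * n + 5 * n


def g(n):
    """Candidate bounding function: n^2."""
    return n * n


def holds_upper(c, n0, limit):
    """True if f(n) <= c * g(n) for every n with n0 < n <= limit."""
    return all(f(n) <= c * g(n) for n in range(n0 + 1, limit + 1))


def holds_lower(c, n0, limit):
    """True if f(n) >= c * g(n) for every n with n0 < n <= limit."""
    return all(f(n) >= c * g(n) for n in range(n0 + 1, limit + 1))


print(holds_upper(4, 5, 10_000))  # Big O witness: c = 4, N0 = 5
print(holds_lower(3, 0, 10_000))  # Omega witness: c = 3, N0 = 0
print(holds_upper(4, 0, 10_000))  # same c, but N0 = 0 is too small
```

The first two checks pass, so f(n) is both O(n²) and Ω(n²), which makes it Θ(n²). The third check fails because the inequality only has to hold beyond N₀: at n = 1, f(1) = 8 is larger than 4·g(1) = 4.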

In really simple words:

- Big O (O()) describes an upper bound on the complexity.

- Omega (Ω()) describes a lower bound on the complexity.

- Theta (Θ()) describes a tight bound: the complexity is bounded from above and below by the same function.

- Little O (o()) describes a strict upper bound: the complexity grows strictly slower than the bounding function.
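A familiar algorithm shows how upper and lower bounds can differ. The sketch below is a plain linear search that also reports how many comparisons it made. The function name `linear_search` and the sample data are assumptions for this illustration.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


data = list(range(1, 1001))       # the values 1 through 1000

print(linear_search(data, 1))     # target is the first element
print(linear_search(data, 1000))  # target is the last element
print(linear_search(data, -5))    # target is not in the list
```

Over all possible inputs, the number of comparisons is never more than n, so it is O(n), and never less than 1, so it is Ω(1). If you look only at the worst case (target last or missing), the count is exactly n, so the worst case is Θ(n).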

Don't be scared by these terms: you can remember them with a graph.

Never forget this graph of the O notations.
